Prompt Tuning tips

Autocomplete

Turning up Text Generator's `min_probability` setting makes it generate only a short autocompletion that follows the given text, which helps people get writing ideas and save time. Many other text generators do not currently support this.
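As a rough illustration, an autocomplete request body could be assembled as below. This is a minimal sketch, not the documented API: only `min_probability` comes from this guide, while the other field names (`text`, `max_length`, `number_of_results`) and the 0 to 1 range are assumptions to check against the API reference.

```python
import json


def build_autocomplete_request(text, min_probability=0.5, max_length=20):
    """Build a JSON-serialisable request body for a short autocompletion."""
    # Assumption: min_probability is a probability between 0 and 1.
    if not 0.0 <= min_probability <= 1.0:
        raise ValueError("min_probability must be between 0 and 1")
    return {
        "text": text,                        # assumed field name: text to continue
        "min_probability": min_probability,  # setting named in this guide
        "max_length": max_length,            # assumed field name: cap on length
        "number_of_results": 1,              # assumed field name: one suggestion
    }


# A higher value asks for a shorter, more confident completion.
payload = build_autocomplete_request("The quick brown fox", min_probability=0.8)
body = json.dumps(payload)
```

The function only builds the body, so it can be tried without an API key or network access.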

Text Generator employs blending so that users do not have to understand tokenization boundaries, which can cause issues in some other text generation systems.

Question answering

With question answering, it often helps to make clear whether you're looking for a question or an answer by including a `Q:` or `A:` line.
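One way to apply this is to build the prompt from `Q:` and `A:` lines and end it with a bare `A:` so the model is cued to answer. The helper below is a hypothetical sketch; the function name and the optional worked examples are illustrative additions, not part of Text Generator.

```python
def build_qa_prompt(question, examples=()):
    """Format optional example pairs and a question as Q:/A: lines."""
    lines = []
    for example_question, example_answer in examples:
        lines.append(f"Q: {example_question.strip()}")
        lines.append(f"A: {example_answer.strip()}")
    lines.append(f"Q: {question.strip()}")
    lines.append("A:")  # trailing marker signals that an answer is expected
    return "\n".join(lines)


prompt = build_qa_prompt(
    "What is the capital of France?",
    examples=[("What is the capital of Italy?", "Rome")],
)
print(prompt)
```

Ending on `Q:` instead would cue the model to produce a question.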

Self-confirming behaviour

Prompts tend to stay on topic, and questions can be asked in such a way that they reinforce existing beliefs.

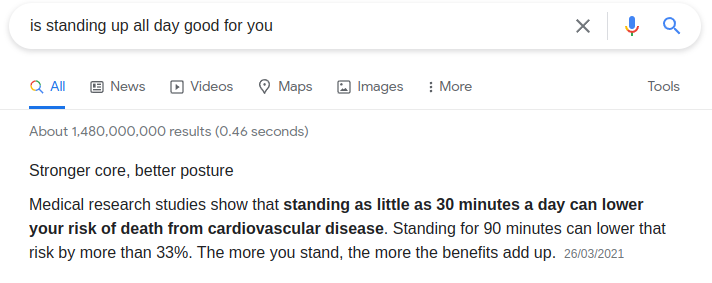

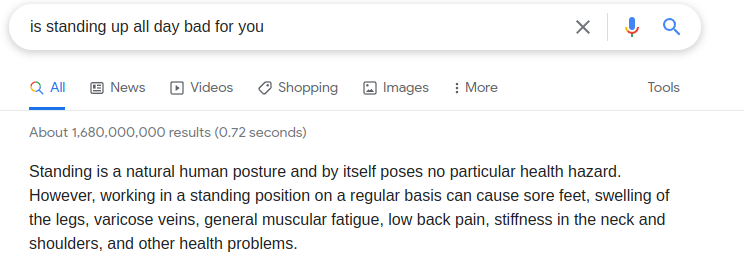

Self-confirming behaviour, or confirmation bias, is a common problem in AI that no model has solved yet.

^ Figure: Even Google struggles with confirmation bias: it gives people what they ask for instead of the truth.

Out of domain datasets

The model can adapt to other datasets, but be sure to include more entity names and context about the domain so the model has a chance of generating realistic text in context.

Our models are trained on an extremely large (TBs) corpus of human language and openly licensed code, so a surprising number of domains are covered.

Bias sensitive domains

You can normalize names and PII by mapping them onto the same names, addresses and so on. This can help reduce gender and ethnic bias, but these mitigations usually harm performance.

Including words such as "polite" or "sensitive", or longer phrases such as "equality for all", in the prompt can also help the generation be more polite and sensitive.
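The normalization step could look something like the sketch below, which maps every listed name or address onto one shared placeholder before the text is sent as a prompt. The function and the mapping are hypothetical; a real pipeline would need a proper entity detector rather than a hand-written lookup table.

```python
import re


def normalize_entities(text, replacements):
    """Replace each listed name or address with a shared placeholder value."""
    if not replacements:
        return text
    # Longest keys first so a full name wins over a shorter overlapping key.
    keys = sorted(replacements, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(re.escape(k) for k in keys) + r")\b")
    return pattern.sub(lambda match: replacements[match.group(0)], text)


# Illustrative mapping: different people and addresses collapse to one value.
replacements = {
    "Maria Garcia": "Alex Smith",
    "John O'Neil": "Alex Smith",
    "42 Elm Street": "1 Main Street",
}
normalized = normalize_entities("Maria Garcia lives at 42 Elm Street.", replacements)
print(normalized)
```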

Toxicity

The models can generate toxic content. This can be somewhat mitigated by ensuring the input prompt doesn't contain any profanity. A profanity filter on the output also helps, but is not currently supported.
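Because output filtering is not built in, both checks can be done on the client side. The sketch below is a deliberately simple word-list approach with placeholder words; the function names are hypothetical and a production filter would need a much larger list and handling for obfuscated spellings.

```python
import re

# Placeholder blocklist; substitute a real word list for actual use.
BLOCKLIST = {"darn", "heck"}


def contains_profanity(text, blocklist=BLOCKLIST):
    """Return True if any blocklisted word appears in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(word in blocklist for word in words)


def mask_profanity(text, blocklist=BLOCKLIST):
    """Replace each blocklisted word with asterisks of the same length."""
    def mask(match):
        word = match.group(0)
        return "*" * len(word) if word.lower() in blocklist else word
    return re.sub(r"[A-Za-z']+", mask, text)


# Check the prompt before sending it, and mask the generation afterwards.
prompt_is_clean = not contains_profanity("Tell me a story about a dragon")
cleaned_output = mask_profanity("Oh Darn it")
```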

Repetition

The models can repeat themselves. If your use case supports retries, retrying a request with a higher `repetition_penalty` works. This approach is used in the wild by 20 Questions with AI, a game based on the Text Generator API in which you interrogate the mind of an AI and make it admit you know what it's thinking.
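A retry loop along these lines could be sketched as follows. Everything here except the `repetition_penalty` name is an assumption: `generate` stands in for whatever function calls the API, the repeated-trigram score is just one possible way to detect repetition, and the starting value, step and threshold are arbitrary.

```python
from collections import Counter


def repeated_ngram_ratio(text, n=3):
    """Fraction of word n-grams that duplicate an earlier n-gram."""
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    repeated = sum(count - 1 for count in counts.values() if count > 1)
    return repeated / len(ngrams)


def generate_with_retries(generate, prompt, start_penalty=1.0, step=0.2,
                          max_attempts=3, max_ratio=0.2):
    """Retry with a higher repetition_penalty until the text looks varied."""
    text, penalty = "", start_penalty
    for attempt in range(max_attempts):
        penalty = start_penalty + attempt * step
        text = generate(prompt, repetition_penalty=penalty)
        if repeated_ngram_ratio(text) <= max_ratio:
            break
    return text, penalty


# Stand-in for a real API call, so the sketch runs offline.
def fake_generate(prompt, repetition_penalty):
    if repetition_penalty < 1.1:
        return "is it big is it big is it big is it big"
    return "is it bigger than a bread box or smaller than a car"


text, used_penalty = generate_with_retries(fake_generate, "Ask a question:")
```

If every attempt is still repetitive, the function returns the last generation along with the penalty used for it, so the caller can decide whether to accept it.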

Shorter generations also help, as does input drawn from random topics and tokens.

You can leave the seed at 0 to make requests non-deterministic, which adds randomness to a game, but it makes benchmarking different settings harder.

Input tokens that are not repetitive also help: repetitive inputs are more likely to result in repetitive generations, and even subtle structure in the language can be repeated. Writing your prompts creatively makes them less structured and less repetitive.

Use Cases

Check out the many tuned use cases and prompts that work well with the Text Generator API under Text Generation Examples. This should help to get an idea of how

Plug

Text Generator offers an API for text and code generation. Secure, affordable, flexible and accurate.

Try examples yourself at: Text Generator Playground
